Privacy-Preserving Federated Learning for Healthcare Data Sharing

Abstract

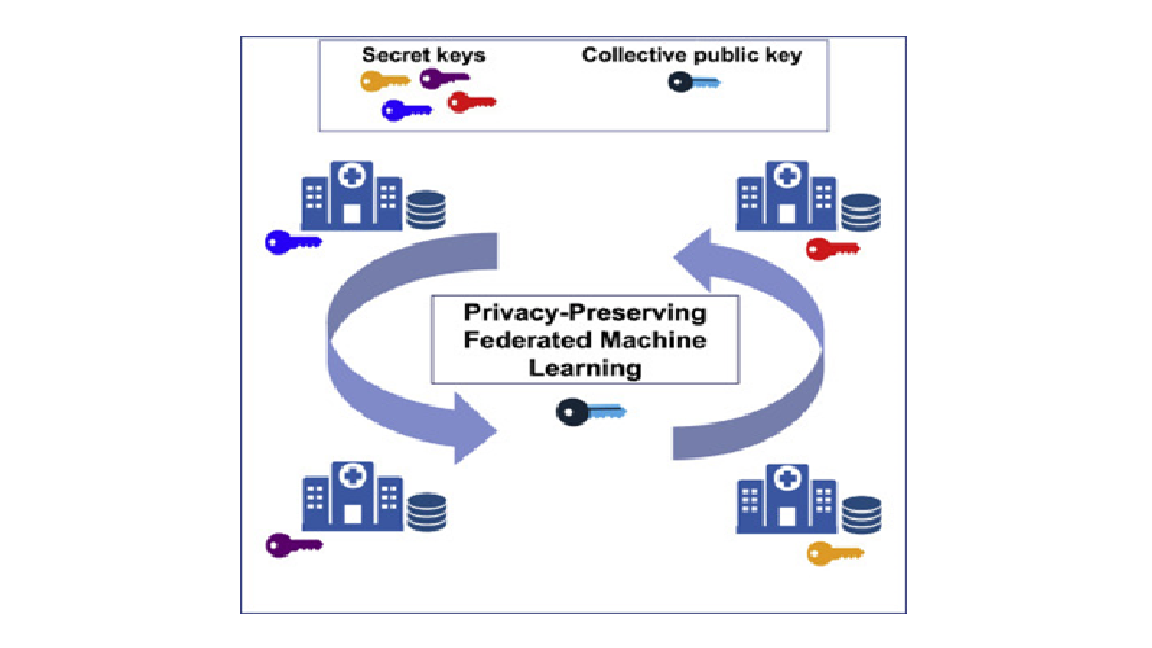

The rapid digitalization of healthcare has produced an unprecedented volume of sensitive patient data. Federated Learning (FL) has emerged as a promising paradigm that enables collaborative model training across multiple healthcare institutions without exposing raw data. However, because participants still share model updates, FL remains susceptible to privacy attacks, including membership inference, data reconstruction, and model inversion. To address these risks, privacy-preserving FL techniques such as differential privacy, secure multi-party computation, and homomorphic encryption have been developed to safeguard patient information while maintaining model utility. In addition, decentralized approaches that incorporate blockchain, together with fairness-aware mechanisms, aim to further improve security and model generalizability. This paper explores recent advances in privacy-preserving FL for healthcare and discusses the trade-offs among privacy, efficiency, and model performance. We also highlight open challenges and future directions for robust, scalable, and ethically responsible FL deployments in real-world medical settings.