Advancements in Neural Architecture Search for Automated Model Design

Abstract

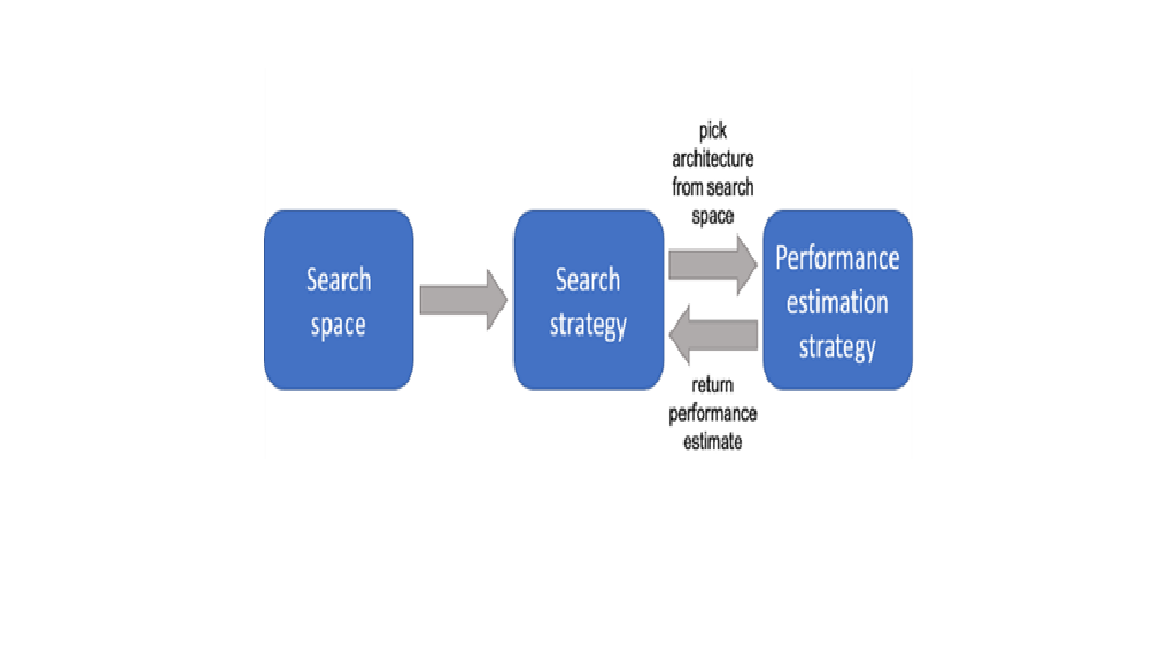

Neural Architecture Search (NAS) has emerged as a transformative approach to automating the design of deep learning models, substantially reducing the human effort and expertise required for architecture engineering. This paper reviews recent advances in NAS techniques, including differentiable search methods, reinforcement learning-based approaches, and evolutionary algorithms. We examine the impact of these methods on model efficiency, scalability, and accuracy across tasks such as image classification, natural language processing, and reinforcement learning. We also discuss hardware-aware optimization strategies that balance model complexity against real-world deployment constraints. We then examine the convergence of NAS with self-supervised learning and foundation models, which points to a shift toward more generalized and automated AI systems. Despite this progress, challenges remain, including high computational cost, limited generalizability, and the trade-off between exploration and exploitation in search strategies. We conclude by outlining future research directions, emphasizing the need for sustainable and interpretable NAS frameworks that broaden access to state-of-the-art AI models across diverse applications.

Article Details

This work is licensed under a Creative Commons Attribution-NoDerivatives 4.0 International License.