Explainable AI in Healthcare: Interpretable Models for Clinical Decision Support

Abstract

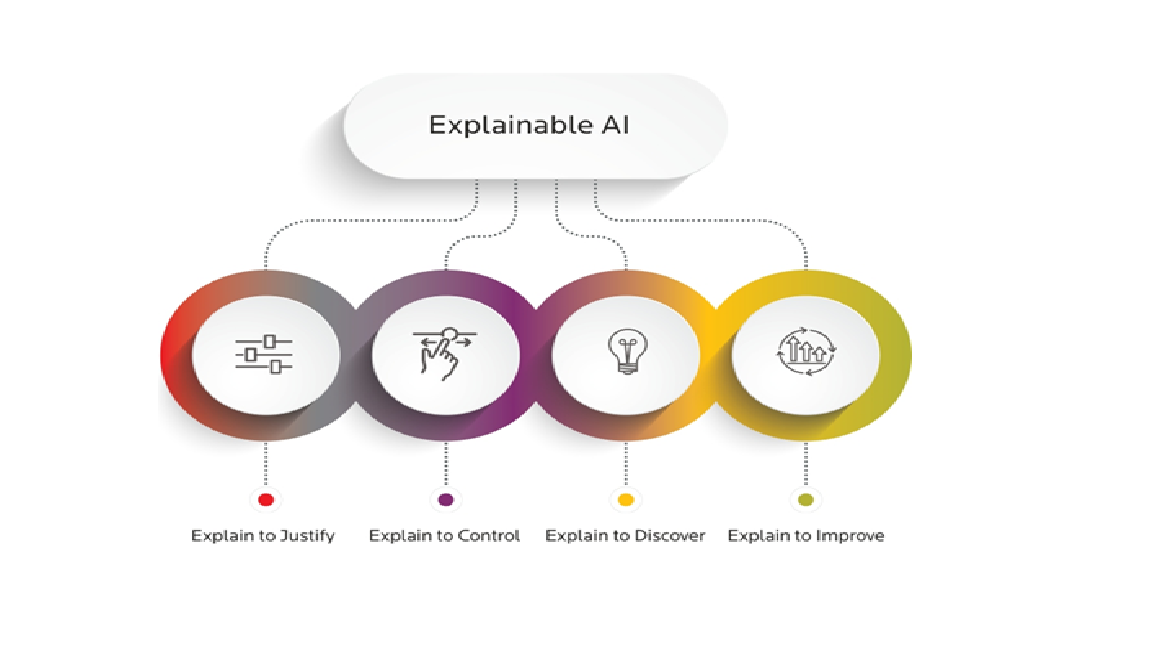

The integration of Artificial Intelligence (AI) into healthcare has led to significant advances in clinical decision support systems (CDSS). However, the complexity and opacity of many AI models raise concerns about their trustworthiness and regulatory compliance, and these concerns hinder clinical adoption. Explainable AI (XAI) seeks to address these challenges by developing interpretable models that enhance transparency, reliability, and human-AI collaboration in medical decision-making. This paper explores XAI techniques applied to healthcare, including rule-based models, attention mechanisms, feature attribution methods, and surrogate models. We discuss their impact on clinician trust, patient safety, and regulatory acceptance. We also highlight key challenges: trade-offs between interpretability and accuracy, biases in model explanations, and the need for standardized evaluation frameworks. By fostering explainability in AI-driven healthcare systems, we aim to bridge the gap between algorithmic decision-making and clinical expertise, ultimately improving patient outcomes and supporting the ethical adoption of AI in medicine.