Privacy-Preserving Machine Learning Techniques for Healthcare Data Analysis

Abstract

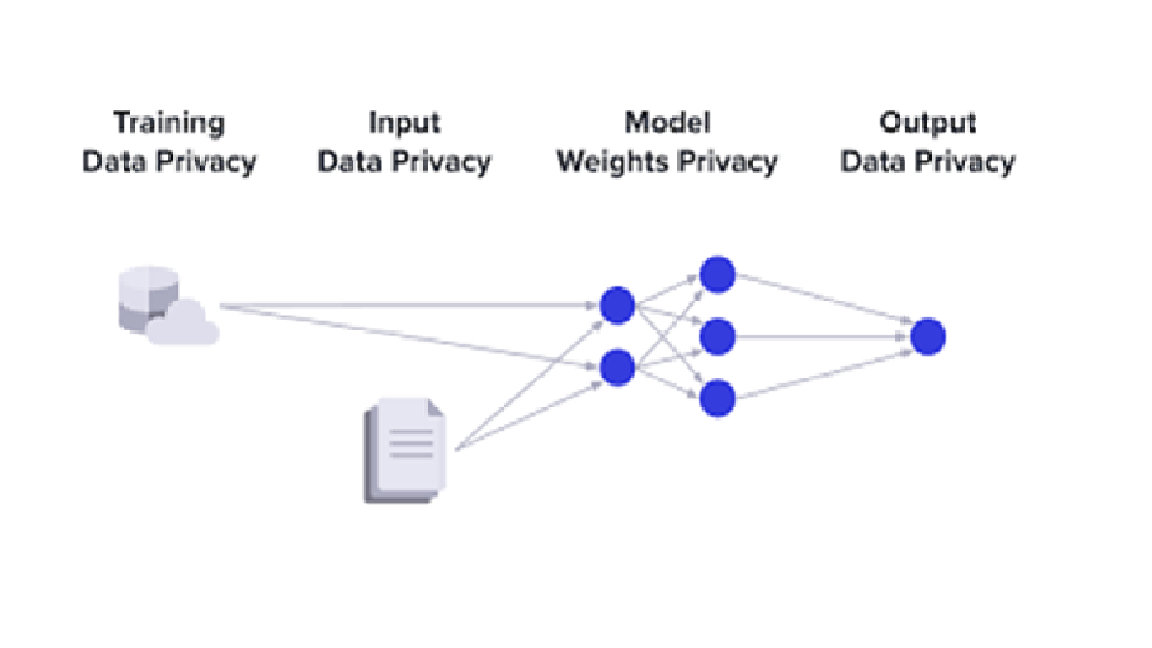

Privacy-preserving machine learning (PPML) has emerged as a critical field in healthcare data analysis, addressing concerns about data security, confidentiality, and regulatory compliance. Traditional machine learning approaches require access to large volumes of patient data, which poses significant risks of data breaches and unauthorized access. To mitigate these risks, PPML techniques draw on cryptographic methods, differential privacy, federated learning, and secure multi-party computation to enable collaborative, privacy-aware data processing. This paper explores the latest advances in PPML for healthcare applications, examining key techniques such as homomorphic encryption, secure aggregation, and privacy-preserving deep learning models. We also discuss the trade-offs among privacy, computational efficiency, and model performance, highlighting the challenges and potential solutions. By enabling secure and ethical machine learning applications, PPML plays a pivotal role in advancing precision medicine, medical diagnostics, and predictive analytics while ensuring compliance with data protection regulations such as HIPAA and GDPR. Looking ahead, scalable and interoperable PPML frameworks are needed to support widespread adoption in real-world healthcare environments.