Text Summarization Using a Hybrid Approach


Abstract

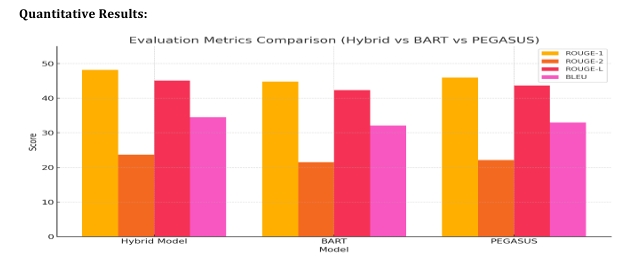

Text summarization is a core task in Natural Language Processing (NLP): it automatically generates concise, meaningful summaries of long texts. It has applications in legal document analysis, news summarization, and academic research. This paper proposes a hybrid approach that combines extractive and abstractive summarization to generate high-quality summaries. The extractive component selects the most informative sentences using statistical techniques, and the abstractive component then rephrases and restructures those sentences with a deep learning model such as the T5 transformer, loaded through the Hugging Face Transformers library. Our implementation, built in Python with the NLTK and Transformers libraries, shows improved summary coherence and retention of key information. Evaluation with ROUGE and BLEU scores supports the effectiveness of the approach, with better results than standalone models such as BART.
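The two-stage pipeline described in the abstract can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the abstract does not specify which statistical technique is used, so the sketch assumes a simple word-frequency score; the regex sentence splitter and the small stopword list stand in for NLTK's tokenizers and stopword corpus so that the extractive stage runs without downloads; and the function names, the `t5-small` checkpoint, and the generation settings are all hypothetical choices.

```python
import re
from collections import Counter
from typing import Callable, List

# Small illustrative stopword list (assumption; NLTK's stopword corpus could be used instead).
STOPWORDS = {"a", "an", "the", "is", "are", "was", "were", "of", "in", "on",
             "and", "or", "to", "for", "with", "it", "this", "that", "as", "by", "from"}


def split_sentences(text: str) -> List[str]:
    # Naive split after ., ! or ? followed by whitespace (stand-in for nltk.sent_tokenize).
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]


def content_words(text: str) -> List[str]:
    # Lowercased word tokens with stopwords removed.
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def extract_top_sentences(text: str, k: int = 2) -> List[str]:
    """Extractive stage: keep the k sentences with the highest average word frequency."""
    sentences = split_sentences(text)
    freq = Counter(content_words(text))

    def score(sentence: str) -> float:
        tokens = content_words(sentence)
        return sum(freq[t] for t in tokens) / len(tokens) if tokens else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)[:k]
    # Return the selected sentences in their original document order.
    return [sentences[i] for i in sorted(ranked)]


def t5_rewrite(text: str, model_name: str = "t5-small") -> str:
    """Abstractive stage: rewrite text with a T5 checkpoint (downloads the model on first use)."""
    # Imported lazily so the extractive stage works without transformers/torch installed.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSeq2SeqLM.from_pretrained(model_name)
    # T5 is trained with task prefixes; "summarize: " selects the summarization task.
    inputs = tokenizer("summarize: " + text, return_tensors="pt",
                       truncation=True, max_length=512)
    output_ids = model.generate(**inputs, max_new_tokens=60, num_beams=4)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


def hybrid_summarize(text: str, k: int = 2,
                     rewrite: Callable[[str], str] = t5_rewrite) -> str:
    """Select k salient sentences, then pass them to the abstractive rewriter."""
    selected = " ".join(extract_top_sentences(text, k))
    return rewrite(selected)
```

Calling `hybrid_summarize(document, k=3)` would run both stages with the assumed T5 checkpoint. The `rewrite` parameter is kept injectable so that the extractive stage can be checked in isolation, or a different sequence-to-sequence model swapped in, without changing the rest of the pipeline.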