Adversarial Machine Learning: Attacks and Defenses in Deep Neural Networks

Abstract

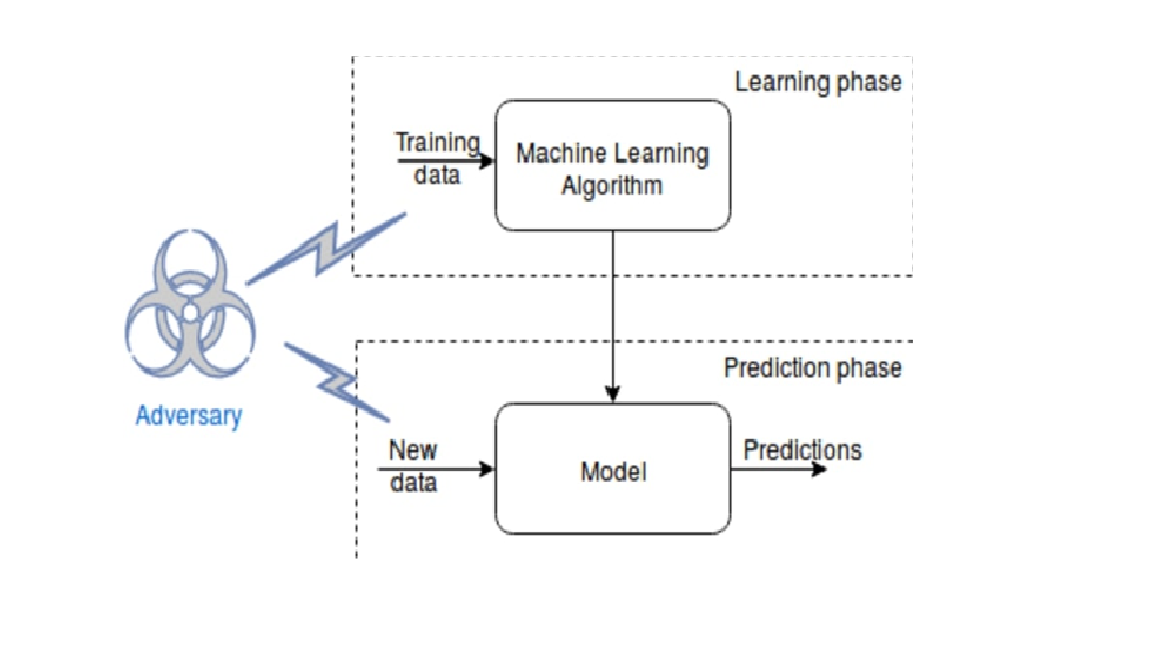

Deep neural networks (DNNs) have achieved remarkable success across a wide range of applications, from image recognition to natural language processing. However, they are vulnerable to adversarial attacks, in which small, deliberately crafted input perturbations cause a model to make incorrect predictions. This vulnerability raises significant concerns about deploying DNNs in security-critical systems. This paper provides a comprehensive overview of adversarial machine learning, covering both attack strategies and defense mechanisms. We categorize and analyze adversarial attack methods, including gradient-based, optimization-based, and transfer-based approaches. We also review state-of-the-art defenses designed to improve model robustness, such as adversarial training, defensive distillation, and input transformation techniques. By examining the interplay between adversaries and defenders, we highlight the ongoing arms race in adversarial machine learning and discuss open challenges and future research directions for building more secure and trustworthy DNN-based systems.
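To make the idea of a gradient-based attack concrete, here is a minimal sketch of the Fast Gradient Sign Method (FGSM), one well-known gradient-based attack, applied to a toy two-feature logistic-regression model rather than a DNN. The model weights, the input, and the perturbation budget `eps` are illustrative assumptions, not values taken from this paper. The input gradient is written in closed form here; for a real DNN it would come from automatic differentiation.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w: list[float], b: float, x: list[float]) -> float:
    """Probability of class 1 under a (toy, hypothetical) logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def loss_grad_wrt_input(w: list[float], b: float, x: list[float], y: int) -> list[float]:
    """Gradient of the binary cross-entropy loss with respect to the INPUT x.

    For logistic regression this is (p - y) * w, where p = predict(w, b, x).
    """
    p = predict(w, b, x)
    return [(p - y) * wi for wi in w]


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(w: list[float], b: float, x: list[float], y: int, eps: float) -> list[float]:
    """FGSM: x_adv = x + eps * sign(grad_x L), an L-infinity step of size eps
    that moves the input in the direction that increases the loss."""
    g = loss_grad_wrt_input(w, b, x, y)
    return [xi + eps * sign(gi) for xi, gi in zip(x, g)]


if __name__ == "__main__":
    w, b = [2.0, -1.0], 0.0  # assumed toy model parameters
    x, y = [0.5, 0.2], 1     # assumed clean input with true label 1
    x_adv = fgsm(w, b, x, y, eps=0.3)
    print(f"clean p(y=1) = {predict(w, b, x):.3f}")        # clean p(y=1) = 0.690
    print(f"adversarial p(y=1) = {predict(w, b, x_adv):.3f}")  # adversarial p(y=1) = 0.475
```

Each feature moves by at most `eps`, yet the prediction crosses the 0.5 decision threshold and the label flips from 1 to 0. Defenses such as adversarial training work by folding examples like `x_adv` back into the training set.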