Stock Price Prediction Using TD3


Abstract

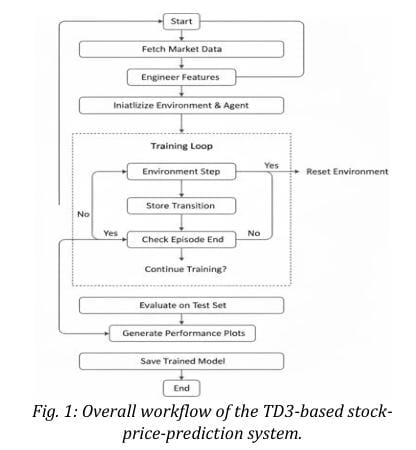

Financial markets are complex, dynamic, and highly non-linear systems in which conventional rule-based trading models struggle to adapt to changing price patterns. This work presents a reinforcement-learning-driven framework for stock price prediction and trading strategy optimization based on the Twin Delayed Deep Deterministic Policy Gradient (TD3) algorithm. The proposed system integrates data preprocessing, environment simulation, and policy training into a reproducible end-to-end pipeline that runs efficiently even on limited hardware resources.

The study addresses key challenges such as non-stationarity, transaction costs, and risk-adjusted performance by embedding drawdown penalties and portfolio constraints directly into the learning process. Unlike discrete-action methods, TD3 enables continuous position sizing, leading to smoother and more realistic trade execution. The implementation follows an Agile development approach, ensuring iterative validation and reproducibility across data sources. Agent performance is assessed with the Sharpe ratio, total return, and maximum drawdown.
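To make the risk terms above concrete, the following is a minimal Python sketch of the three evaluation metrics, together with one possible per-step reward that charges a transaction cost and a drawdown penalty on a continuous position. It is an illustration under stated assumptions, not the study's implementation: the function names, the 252-period annualization, and the `cost_rate` and `drawdown_weight` values are all hypothetical.

```python
import math


def total_return(equity):
    """Fractional gain of the final equity value over the initial one."""
    return equity[-1] / equity[0] - 1.0


def max_drawdown(equity):
    """Largest peak-to-trough decline, as a positive fraction of the peak."""
    peak = equity[0]
    worst = 0.0
    for value in equity:
        peak = max(peak, value)
        worst = max(worst, (peak - value) / peak)
    return worst


def sharpe_ratio(returns, periods_per_year=252, risk_free_rate=0.0):
    """Annualized Sharpe ratio from per-period returns (sample std dev).

    periods_per_year=252 assumes daily bars; this is an assumption.
    """
    n = len(returns)
    if n < 2:
        return 0.0
    rf_per_period = risk_free_rate / periods_per_year
    excess = [r - rf_per_period for r in returns]
    mean = sum(excess) / n
    variance = sum((x - mean) ** 2 for x in excess) / (n - 1)
    std = math.sqrt(variance)
    if std == 0.0:
        return 0.0
    return mean / std * math.sqrt(periods_per_year)


def step_reward(position, prev_position, asset_return, drawdown,
                cost_rate=0.001, drawdown_weight=0.1):
    """Hypothetical per-step reward for a continuous position in [-1, 1].

    Reward = position P&L - proportional trading cost - drawdown penalty.
    cost_rate and drawdown_weight are illustrative values only.
    """
    pnl = position * asset_return
    cost = cost_rate * abs(position - prev_position)
    return pnl - cost - drawdown_weight * drawdown


if __name__ == "__main__":
    equity = [100.0, 110.0, 99.0, 121.0]
    print("total return:", total_return(equity))
    print("max drawdown:", max_drawdown(equity))
    print("reward:", step_reward(0.5, 0.0, 0.02, max_drawdown(equity)))
```

Because the cost term scales with the change in position, an agent that outputs a continuous action is penalized in proportion to how much it rebalances, which is one way the smoother execution described above could be encouraged.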

Experimental results indicate that modern reinforcement-learning techniques can produce adaptive, risk-aware trading policies capable of outperforming traditional heuristic systems. The proposed architecture thus bridges the gap between theoretical deep reinforcement learning (DRL) algorithms and practical algorithmic-trading applications, offering a scalable foundation for future quantitative-finance research.