ADGAN++: A Deep Framework for Controllable and Realistic Face Synthesis


Abstract

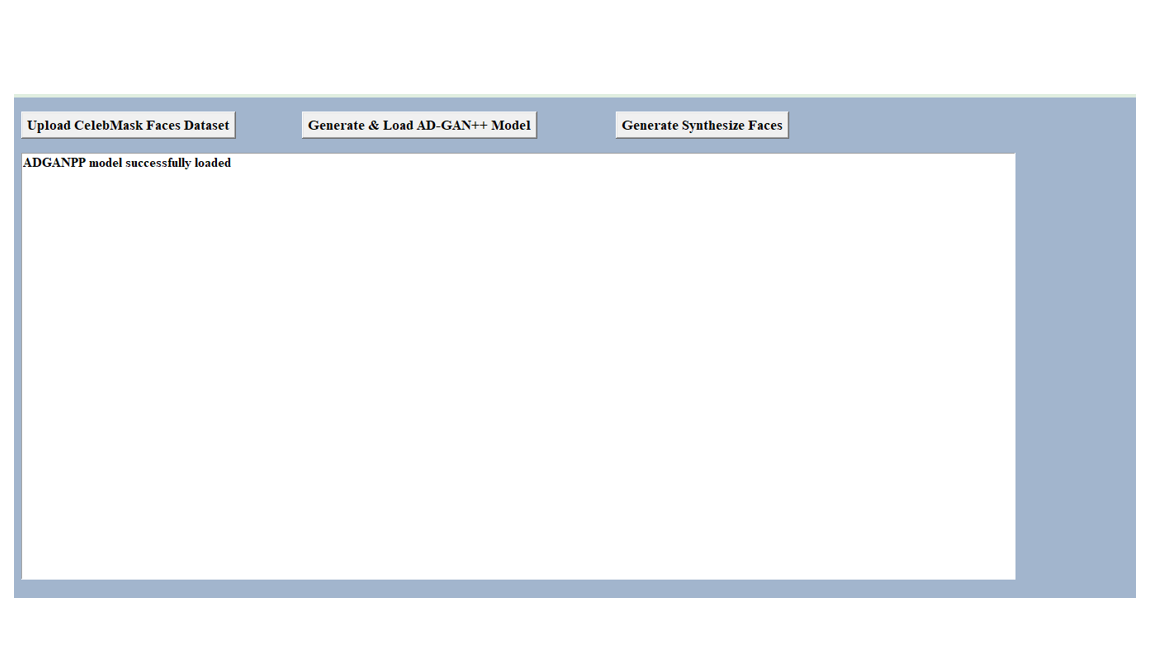

Advances in Generative Adversarial Networks (GANs) have driven significant progress in image synthesis. This paper introduces ADGAN++, an enhanced model that builds on the Attribute-Decomposed GAN (ADGAN) architecture to improve controllable and realistic image generation. ADGAN++ generates images with user-defined attributes by embedding the characteristics of each image component into the latent space as a separate, manipulable vector. Unlike its predecessor, ADGAN++ adopts a serial encoding approach that encodes and synthesizes each image component individually, which lets it handle complex attribute variations more effectively. The model is trained on the CelebMask Faces dataset and targets facial image synthesis. It supports attribute editing, pose transfer, and multi-style generation by combining attributes from source and target images. Its effectiveness is evaluated quantitatively with the Structural Similarity Index Measure (SSIM), which measures the structural similarity between original and generated images; the scores indicate visual consistency between the two. This work demonstrates the potential of ADGAN++ for applications that require fine-grained control over image features, particularly facial synthesis and editing.
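The abstract names SSIM as the quantitative metric. As an illustrative sketch (not the authors' evaluation code), the snippet below computes mean SSIM between two grayscale images using the standard formula with K1 = 0.01 and K2 = 0.03. As a simplifying assumption, it uses uniform 7 × 7 windows rather than the Gaussian weighting often used in practice; the function name `ssim` and its parameters `win` and `data_range` are hypothetical choices for this example.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def ssim(x: np.ndarray, y: np.ndarray, win: int = 7, data_range: float = 255.0) -> float:
    """Mean SSIM of two same-shaped grayscale images (sketch, uniform windows).

    Assumption: uniform win x win windows instead of a Gaussian window.
    """
    if x.shape != y.shape:
        raise ValueError("images must have the same shape")
    x = x.astype(np.float64)
    y = y.astype(np.float64)

    # Stabilizing constants from the standard SSIM definition.
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2

    # All win x win patches: shape (H - win + 1, W - win + 1, win, win).
    px = sliding_window_view(x, (win, win))
    py = sliding_window_view(y, (win, win))

    # Local means, variances, and covariance for every window.
    mu_x = px.mean(axis=(-2, -1))
    mu_y = py.mean(axis=(-2, -1))
    dx = px - mu_x[..., None, None]
    dy = py - mu_y[..., None, None]
    var_x = (dx ** 2).mean(axis=(-2, -1))
    var_y = (dy ** 2).mean(axis=(-2, -1))
    cov_xy = (dx * dy).mean(axis=(-2, -1))

    # Per-window SSIM, then average over all windows.
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float((num / den).mean())


# Usage: compare a synthetic "original" with a noisy "generated" version.
rng = np.random.default_rng(0)
original = rng.integers(0, 256, size=(64, 64)).astype(np.float64)
generated = np.clip(original + rng.normal(0.0, 20.0, size=original.shape), 0, 255)
print(ssim(original, original))   # 1.0
print(ssim(original, generated))  # a value below 1.0
```

Identical images score exactly 1, and the score drops as the generated image diverges structurally from the original. Library implementations such as scikit-image's `structural_similarity` add options (Gaussian weighting, multichannel input) that this sketch omits.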