Educational Video Time-Stamped based on Texttiling with Sentence-BERT Embeddings


Abstract

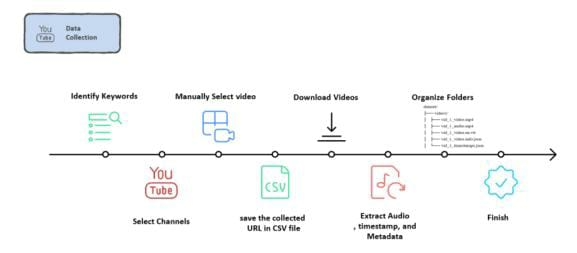

Automatically segmenting long educational videos into coherent thematic units improves content navigation, enables targeted review, and supports downstream applications such as interactive video indexing and summarization. In this work, we propose an unsupervised, transcript-based segmentation method that enhances the classical TextTiling algorithm with contextual Sentence-BERT embeddings. The method detects topic transitions as drops in semantic coherence between consecutive transcript segments and requires no labeled training data. To evaluate our approach, we introduce EVTS (Educational Video Timestamp Segmentation), a large-scale dataset of 1,553 real-world educational YouTube videos, each annotated with creator-provided timestamps marking the start of distinct instructional segments. Experimental results show that our method aligns well with these human-defined boundaries, achieving an F1 score of 0.665, a precision of 0.735, and a Pk (a segmentation error rate, where lower is better) of 0.365 under an adaptive configuration. These findings indicate that the proposed approach captures meaningful topic shifts and produces accurate, time-stamped segments suitable for real-world educational applications, even when evaluated against noisy, non-expert annotations.
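
The idea of detecting topic transitions as drops in semantic coherence can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the window size, the depth-score rule, and the cutoff (mean plus a fraction of the standard deviation) are all assumptions chosen for illustration. The sketch takes precomputed sentence embeddings as plain lists of floats; in practice these would come from a Sentence-BERT model, for example via the `sentence-transformers` package.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def mean_vector(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def gap_similarities(embeddings, window=2):
    """Similarity across each gap between sentence i-1 and sentence i.

    Each side of the gap is represented by the mean embedding of up to
    `window` sentences (an assumed block size, as in TextTiling blocks).
    """
    sims = []
    for gap in range(1, len(embeddings)):
        left = embeddings[max(0, gap - window):gap]
        right = embeddings[gap:gap + window]
        sims.append(cosine(mean_vector(left), mean_vector(right)))
    return sims


def depth_scores(sims):
    """TextTiling-style depth: how far each valley sits below the nearest
    peak on its left plus the nearest peak on its right."""
    depths = []
    for i, s in enumerate(sims):
        left = i
        while left > 0 and sims[left - 1] >= sims[left]:
            left -= 1
        right = i
        while right < len(sims) - 1 and sims[right + 1] >= sims[right]:
            right += 1
        depths.append((sims[left] - s) + (sims[right] - s))
    return depths


def find_boundaries(embeddings, window=2, k=0.5):
    """Return indices of sentences that start a new segment.

    A gap is a boundary when its depth is a local maximum and exceeds
    mean + k * std of all depths (the cutoff rule is an assumption).
    """
    sims = gap_similarities(embeddings, window)
    if not sims:
        return []
    depths = depth_scores(sims)
    mean = sum(depths) / len(depths)
    std = math.sqrt(sum((d - mean) ** 2 for d in depths) / len(depths))
    cutoff = mean + k * std
    boundaries = []
    for i, d in enumerate(depths):
        prev_d = depths[i - 1] if i > 0 else float("-inf")
        next_d = depths[i + 1] if i < len(depths) - 1 else float("-inf")
        if d > cutoff and d >= prev_d and d >= next_d:
            boundaries.append(i + 1)  # gap i precedes sentence i + 1
    return boundaries


def boundary_timestamps(boundaries, start_times):
    """Map boundary sentence indices to transcript start times (seconds)."""
    return [start_times[b] for b in boundaries]
```

With transcript sentences that carry start times, `boundary_timestamps(find_boundaries(embeddings), start_times)` yields candidate chapter timestamps. Real embeddings could be obtained with something like `SentenceTransformer(model_name).encode(sentences).tolist()`, where the choice of model is left open here.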

Article Details

This work is licensed under a Creative Commons Attribution-NoDerivatives 4.0 International License.