Arabic Summarization Based on Encoder-Decoder Model


Abstract

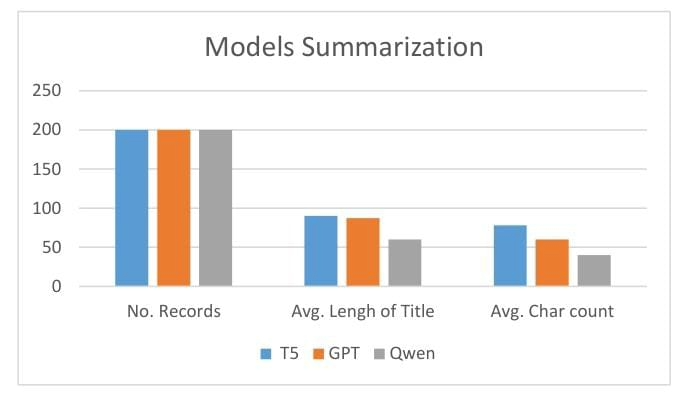

This study addresses abstractive summarization of Arabic text with deep learning and natural language processing by developing an abstractive model for summarizing Arabic documents. The T5 architecture is trained and customized for the Arabic summarization task. The resulting model, tested on texts from diverse Arabic sources, achieves a summary accuracy of 89.4%. The same data was fed into the compared models to assess their relative efficiency. The study concludes that combining transformer architectures with deep learning is a promising way to build intelligent solutions for Arabic content summarization. Such an implementation can help process the immense volume of available information quickly and precisely.
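As a minimal sketch, assuming the standard T5 text-to-text convention, each Arabic article and its reference summary can be cast into an encoder input (prefixed with a task string) and a decoder target before fine-tuning. The `summarize: ` prefix, the word limits, and the helper names `make_example` and `truncate_words` are illustrative assumptions, not the authors' settings. A real pipeline would truncate the subword tokens produced by the model's tokenizer rather than whitespace-separated words.

```python
from dataclasses import dataclass

# Assumed task prefix, following the original T5 text-to-text convention.
TASK_PREFIX = "summarize: "


@dataclass
class Seq2SeqExample:
    source: str  # text fed to the encoder
    target: str  # reference summary the decoder learns to produce


def truncate_words(text: str, max_words: int) -> str:
    """Crude whitespace truncation; a real pipeline truncates subword tokens."""
    words = text.split()
    return " ".join(words[:max_words])


def make_example(article: str, summary: str,
                 max_source_words: int = 512,
                 max_target_words: int = 64) -> Seq2SeqExample:
    """Cast one (article, summary) pair into T5's text-to-text form.

    The default limits are hypothetical placeholders, not values from the study.
    """
    source = TASK_PREFIX + truncate_words(article, max_source_words)
    target = truncate_words(summary, max_target_words)
    return Seq2SeqExample(source=source, target=target)


if __name__ == "__main__":
    ex = make_example("النص العربي الطويل هنا", "ملخص قصير", max_source_words=3)
    print(ex.source)  # summarize: النص العربي الطويل
    print(ex.target)  # ملخص قصير
```

Keeping the task prefix on the encoder side lets a single pretrained checkpoint be steered toward summarization without architectural changes, which is the main appeal of the text-to-text framing.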


This work is licensed under a Creative Commons Attribution-NoDerivatives 4.0 International License.