Sign Language Recognition using Deep Learning

Abstract

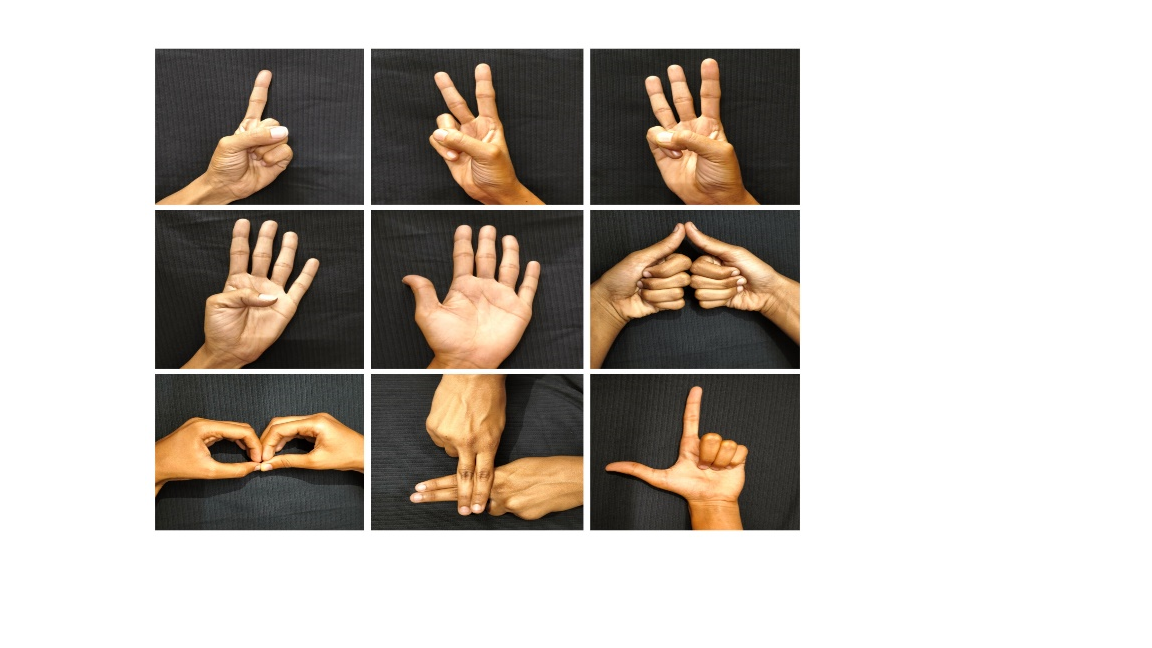

Sign language is a vital means of communication for people with hearing impairments, but most hearing people do not know how to use it. Sign language recognition (SLR) systems help bridge this gap by using artificial intelligence (AI) and computer vision to convert sign gestures into text or speech. This study proposes a convolutional neural network (CNN)-based SLR model for recognizing numeric gestures in sign language. The proposed model is trained on a digit- and alphabet-based dataset to ensure accurate classification of hand gestures. We evaluate two deep learning models: a Sequential model and a pre-trained DenseNet201 model. The Sequential model achieved an accuracy of 95.43%, while the DenseNet201 model performed better, with an accuracy of 99.41%. These results show that our approach is highly effective at correctly identifying sign language gestures.