LoMar: A Secure Federated Learning Approach Against Model Poisoning Attacks


Abstract

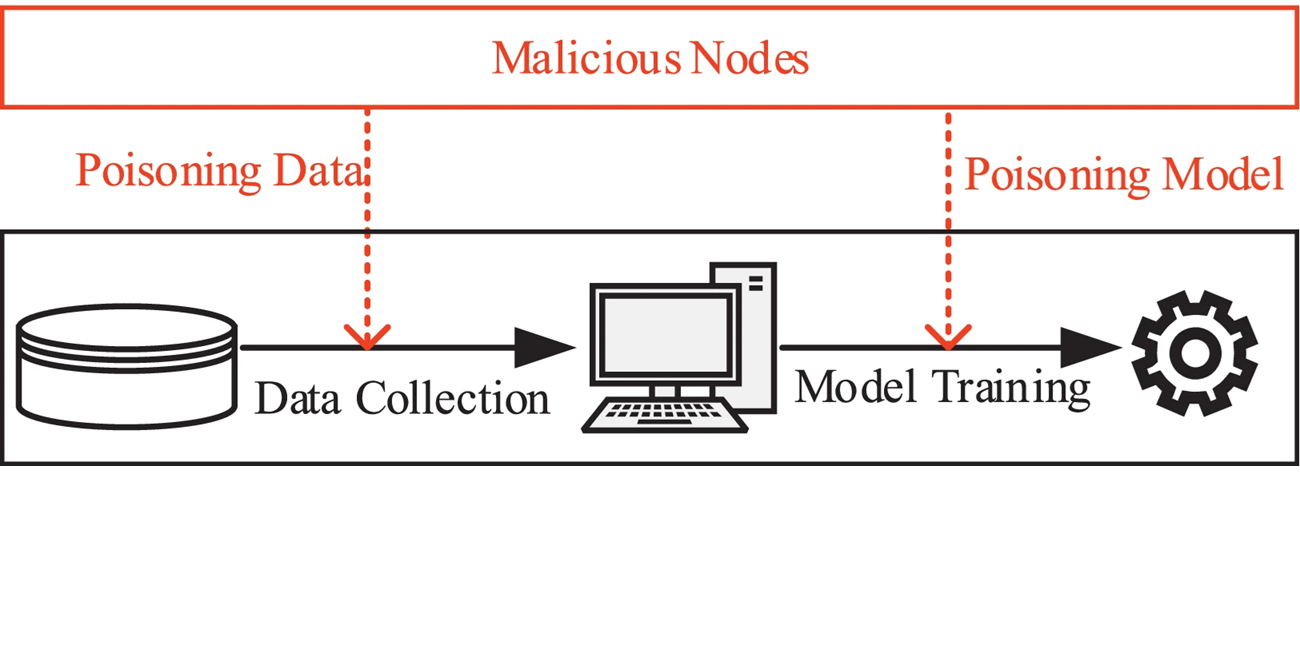

With the widespread adoption of Federated Learning (FL) in domains requiring data privacy, such as healthcare, finance, and mobile intelligence, model integrity has become an increasingly critical challenge. Although FL preserves user privacy by keeping data local and sharing only model updates with a central server, it remains vulnerable to poisoning attacks, in which adversaries manipulate local training data to compromise global model performance. In this study, we present LoMar (Local Model Anomaly Rejection), a lightweight and effective defense mechanism against such poisoning attacks in FL environments. LoMar uses Kernel Density Estimation (KDE) to evaluate the distribution of client model updates. By measuring each update's deviation from expected update patterns through its neighborhood density, LoMar detects and filters out anomalous or malicious model submissions before they influence global model aggregation. To demonstrate the effectiveness of LoMar, we simulate poisoning by intentionally mislabeling training data in the MNIST digit classification task. The system architecture includes a server module and multiple client applications, with genuine and poisoned model versions uploaded separately. The server-side implementation of LoMar successfully identifies poisoned models based on KDE threshold evaluations, ensuring that only legitimate updates are aggregated. Furthermore, we introduce an extension mechanism based on model compression to minimize communication overhead. This reduces model size by approximately 10%, improving transmission speed and bandwidth efficiency without sacrificing model accuracy. Experimental results show that LoMar not only maintains high classification accuracy in the presence of poisoning but also significantly outperforms FL systems lacking defensive mechanisms. The integration of model compression further enhances system scalability, making LoMar a robust, practical solution for secure and efficient federated learning in real-world scenarios.
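
To make the density-based filtering idea concrete, the following is a minimal sketch, not the authors' implementation. It assumes each client update is a flattened parameter vector, scores each update with a leave-one-out Gaussian kernel density over the other clients' updates, and drops updates whose score falls below a fraction of the median score. The function names (`kde_scores`, `filter_and_aggregate`), the `bandwidth` and `ratio` values, and the synthetic data are all illustrative assumptions; the abstract does not specify LoMar's exact scoring rule or threshold.

```python
import numpy as np


def kde_scores(updates, bandwidth):
    """Leave-one-out Gaussian kernel density score for each client update."""
    x = np.asarray(updates, dtype=float)  # shape: (n_clients, n_params)
    # Pairwise squared Euclidean distances between client updates.
    sq_dists = ((x[:, None, :] - x[None, :, :]) ** 2).sum(axis=-1)
    kernel = np.exp(-sq_dists / (2.0 * bandwidth ** 2))
    np.fill_diagonal(kernel, 0.0)  # exclude each update's own contribution
    return kernel.sum(axis=1) / (len(x) - 1)


def filter_and_aggregate(updates, bandwidth=1.0, ratio=0.5):
    """Keep updates whose density is at least `ratio` times the median
    density (an assumed threshold rule), then average the survivors."""
    x = np.asarray(updates, dtype=float)
    scores = kde_scores(x, bandwidth)
    keep = scores >= ratio * np.median(scores)
    return x[keep].mean(axis=0), keep


# Synthetic stand-in for real model updates: 8 honest clients clustered
# near zero and 2 poisoned clients shifted far from the honest cluster.
rng = np.random.default_rng(0)
honest = rng.normal(0.0, 0.1, size=(8, 5))
poisoned = rng.normal(3.0, 0.1, size=(2, 5))
updates = np.vstack([honest, poisoned])

aggregate, keep = filter_and_aggregate(updates)
print("kept clients:", np.flatnonzero(keep))
print("aggregate:", aggregate)
```

In this toy setting the poisoned updates sit in a sparse region, so their density scores are low and they are excluded before averaging. A threshold relative to the median only works while honest clients form the majority, and real model updates are far higher-dimensional, so the bandwidth and threshold would need tuning.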