Illuminating Low-Light Scenes: YOLOv5 and Federated Learning for Autonomous Vision

Abstract

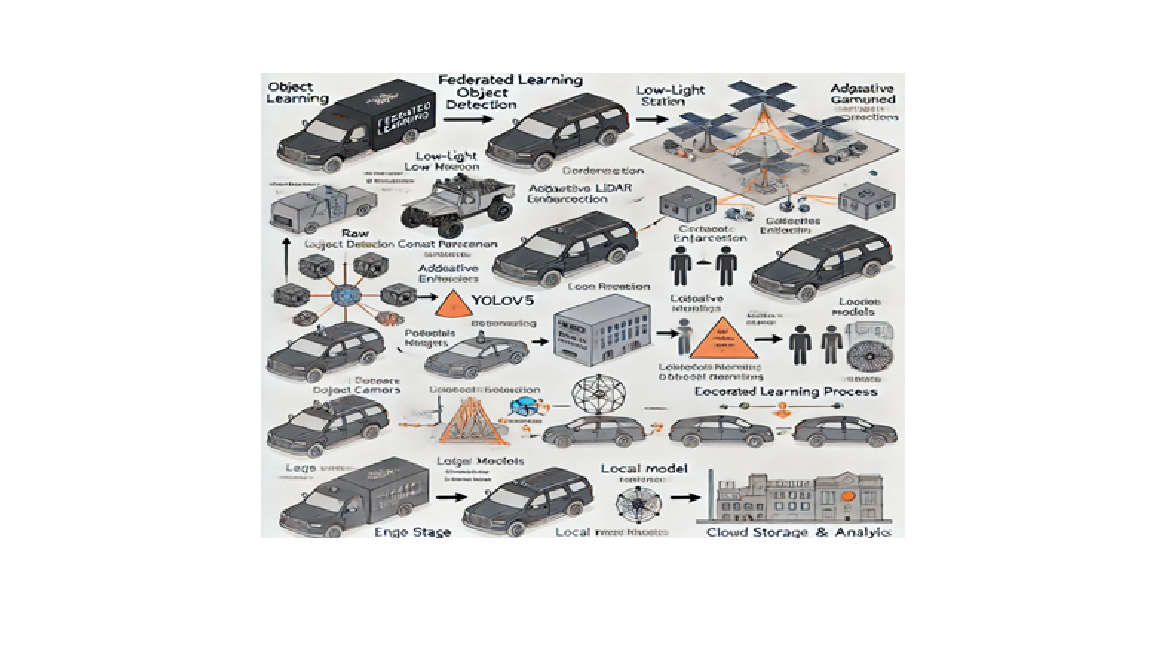

Object detection is central to the safe navigation of autonomous vehicles, particularly under low-light conditions where traditional vision-based systems often fail. This study presents an approach to improving object detection in such environments by combining Federated Learning with the YOLOv5 (You Only Look Once, Version 5) deep learning model. We curated a vehicle dataset comprising both high-light and low-light images and trained the model on it, covering five classes: Bus, Car, Motorbike, Truck, and Person.

Our approach uses a decentralized training framework in which individual clients train YOLOv5 models locally and transmit the learned parameters to a central server for aggregation, enabling privacy-preserving, scalable model updates across multiple edge devices. An image illumination step is integrated into the pipeline to enhance visibility in low-light images prior to detection. The system's architecture includes modules for dataset upload, model training and loading, federated model updates, real-time low-light detection, and performance evaluation through precision, recall, and loss graphs.

Experimental results demonstrate that the proposed model detects objects accurately under varying lighting conditions, with significant improvements in performance metrics observed over training epochs. This federated learning-based solution offers a secure, efficient, and robust framework for real-time object detection in autonomous driving applications, particularly for visual perception in nighttime or low-visibility scenarios.
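The server-side aggregation step described above can be illustrated with a minimal sketch. The abstract does not state which aggregation rule is used, so the example below assumes sample-size-weighted averaging in the style of FedAvg. The function name `federated_average`, the parameter names, and the toy weight values are hypothetical and stand in for the flattened YOLOv5 parameters that each client would transmit.

```python
from typing import Dict, List, Sequence

# Hypothetical representation: parameter name -> flattened list of values.
Weights = Dict[str, List[float]]


def federated_average(
    client_weights: Sequence[Weights], client_sizes: Sequence[int]
) -> Weights:
    """Average client parameters, weighting each client by its sample count.

    This is an assumed FedAvg-style rule, not necessarily the one used
    in the study.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")

    aggregated: Weights = {}
    for name in client_weights[0]:
        length = len(client_weights[0][name])
        # Weighted mean of each parameter entry across all clients.
        aggregated[name] = [
            sum(w[name][i] * n for w, n in zip(client_weights, client_sizes)) / total
            for i in range(length)
        ]
    return aggregated


# Two hypothetical clients with toy parameters and local dataset sizes.
client_a = {"conv.weight": [1.0, 2.0], "conv.bias": [0.0]}
client_b = {"conv.weight": [3.0, 6.0], "conv.bias": [4.0]}
global_weights = federated_average([client_a, client_b], client_sizes=[100, 300])
print(global_weights)  # {'conv.weight': [2.5, 5.0], 'conv.bias': [3.0]}
```

In this sketch only parameters leave each client, never images, which is the property the privacy-preserving claim rests on. Clients with more local samples pull the global model further toward their own update.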