Mood Sync: Personalized Music and Driver Safety through Facial Emotion Recognition

Abstract

Recent advances in deep learning models and frameworks make it practical to tackle increasingly complex problems. In this paper, we focus on two of them: detecting driver drowsiness and recognizing the driver's emotions. Our goal is a system that infers a driver's emotional and physical state and responds accordingly. It alerts the driver when signs of fatigue are detected and suggests music that matches their current emotions, with the aim of improving both safety and the overall driving experience.
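The mood-based music suggestion can be illustrated with a minimal Python sketch. It assumes the emotion recognizer returns a confidence score from 0 to 100 for each emotion label, using uppercase labels such as `HAPPY` and `SAD` in the style of AWS Rekognition output. The `MOOD_PLAYLISTS` table, the playlist names, and the 50-point confidence cut-off are hypothetical placeholders, not the mapping used in this work.

```python
from typing import Dict, List

# Hypothetical emotion-to-playlist mapping; the actual mapping used by the
# system is not published, so these entries are placeholders.
MOOD_PLAYLISTS: Dict[str, List[str]] = {
    "HAPPY": ["upbeat-pop"],
    "SAD": ["comforting-acoustic"],
    "ANGRY": ["calming-ambient"],
    "CALM": ["easy-listening"],
}
DEFAULT_PLAYLIST: List[str] = ["easy-listening"]


def dominant_emotion(scores: Dict[str, float]) -> str:
    """Return the emotion label with the highest confidence score."""
    if not scores:
        raise ValueError("no emotion scores provided")
    return max(scores, key=scores.get)


def suggest_playlist(scores: Dict[str, float], min_confidence: float = 50.0) -> List[str]:
    """Pick a playlist for the dominant emotion, falling back to a default
    playlist when the top score is below min_confidence or unmapped."""
    label = dominant_emotion(scores)
    if scores[label] < min_confidence:
        return DEFAULT_PLAYLIST
    return MOOD_PLAYLISTS.get(label, DEFAULT_PLAYLIST)


if __name__ == "__main__":
    # prints: ['upbeat-pop']
    print(suggest_playlist({"HAPPY": 91.2, "CALM": 6.1, "SAD": 2.7}))
```

Falling back to a neutral default when no emotion is detected with confidence keeps the music from switching on noisy, low-confidence frames.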

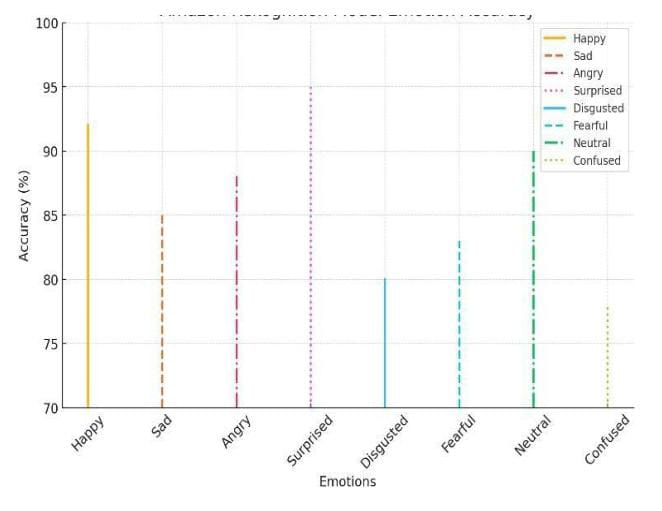

For drowsiness detection, we use the dlib library's facial landmark shape predictor to track the state of the driver's eyes in real time. If the eyes remain closed for longer than a short threshold, an alert is triggered to wake the driver. In addition, we use AWS Rekognition to improve facial emotion detection, AWS Polly to generate spoken audio alerts, and AWS S3 buckets to store and manage data efficiently. This integrated approach is designed both to improve driver safety and to personalize the journey with music that suits the driver's mood.
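The abstract does not specify how eye closure is measured from the landmarks. One widely used approach with dlib's 68-point landmark model is the eye aspect ratio (EAR), which falls toward zero as the eyelids close. The minimal sketch below assumes this technique; the `DrowsinessMonitor` class, the 0.25 EAR threshold, and the 20-frame window are hypothetical choices that would need tuning for a given camera and frame rate.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]

# In dlib's 68-point model (0-indexed), points 36-41 outline one eye and
# points 42-47 the other, each ordered p1..p6 around the eye contour.
RIGHT_EYE = range(36, 42)
LEFT_EYE = range(42, 48)


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR = (|p2-p6| + |p3-p5|) / (2 * |p1-p4|) for six eye landmarks."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


class DrowsinessMonitor:
    """Hypothetical helper: signals an alert once the mean EAR of both eyes
    stays below ear_threshold for consec_frames consecutive frames."""

    def __init__(self, ear_threshold: float = 0.25, consec_frames: int = 20):
        self.ear_threshold = ear_threshold
        self.consec_frames = consec_frames
        self.closed_count = 0

    def update(self, left_eye: Sequence[Point], right_eye: Sequence[Point]) -> bool:
        ear = (eye_aspect_ratio(left_eye) + eye_aspect_ratio(right_eye)) / 2.0
        if ear < self.ear_threshold:
            self.closed_count += 1  # eyes look closed in this frame
        else:
            self.closed_count = 0  # any open-eye frame resets the counter
        return self.closed_count >= self.consec_frames


if __name__ == "__main__":
    # Synthetic landmarks for an almost-closed eye (EAR is about 0.067).
    closed_eye = [(0, 0), (1, 0.1), (2, 0.1), (3, 0), (2, -0.1), (1, -0.1)]
    monitor = DrowsinessMonitor()
    for frame in range(1, 26):
        if monitor.update(closed_eye, closed_eye):
            print(f"alert at frame {frame}")  # prints: alert at frame 20
            break
```

In a live loop, the six points per eye would come from a `dlib.shape_predictor` result, for example `[(shape.part(i).x, shape.part(i).y) for i in LEFT_EYE]`, and a `True` return from `update` would trigger the audio alert. Counting consecutive frames rather than reacting to a single frame keeps normal blinks from raising false alarms.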

This work is licensed under a Creative Commons Attribution-NoDerivatives 4.0 International License.