Robotic Perception and Manipulation in Unstructured Environments

Abstract

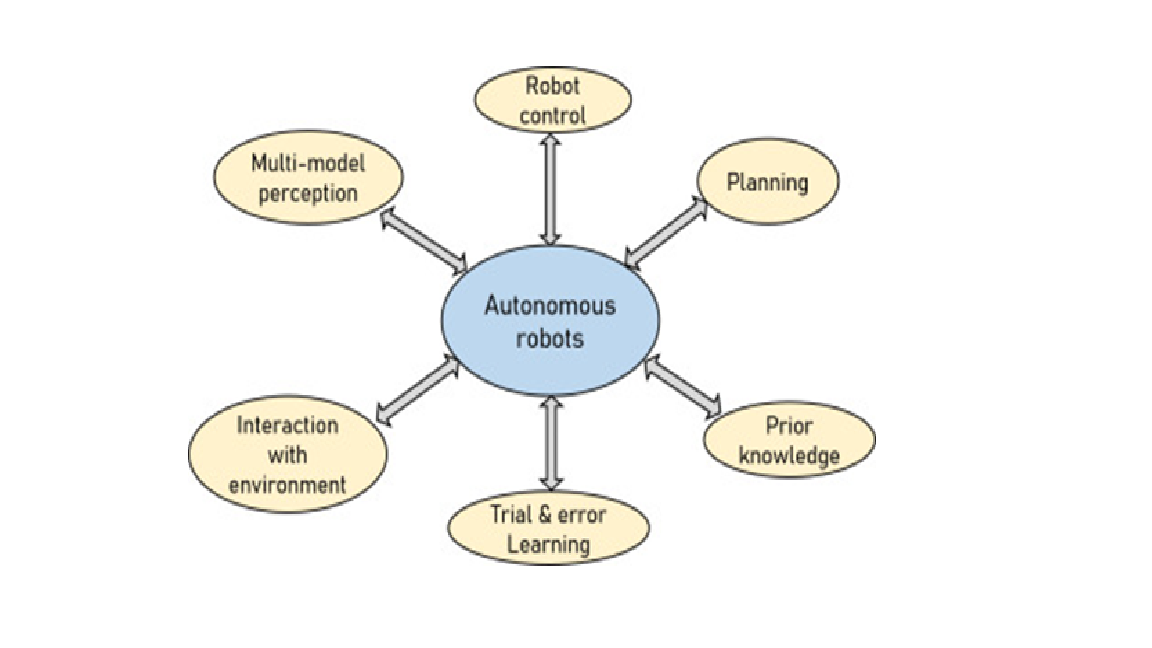

Robots operating in unstructured environments must perceive, interpret, and interact with dynamic, unpredictable surroundings. Unlike controlled settings, these environments present challenges such as occlusions, clutter, deformable objects, and varying lighting conditions. Recent advances in artificial intelligence, computer vision, and sensor fusion have improved robotic perception, allowing robots to localize objects, recognize affordances, and predict physical interactions. In parallel, developments in motion planning, grasp synthesis, and reinforcement learning have improved robotic manipulation, enabling robots to adapt to real-world variability. This paper reviews state-of-the-art approaches in robotic perception and manipulation, emphasizing learning-based methods, multimodal sensing, and active perception strategies. We also discuss open challenges and future directions for autonomous robot interaction with unstructured environments in domains such as industrial automation, service robotics, and search-and-rescue operations.