Federated Learning Frameworks for Privacy-Preserving Collaborative Machine Learning

Abstract

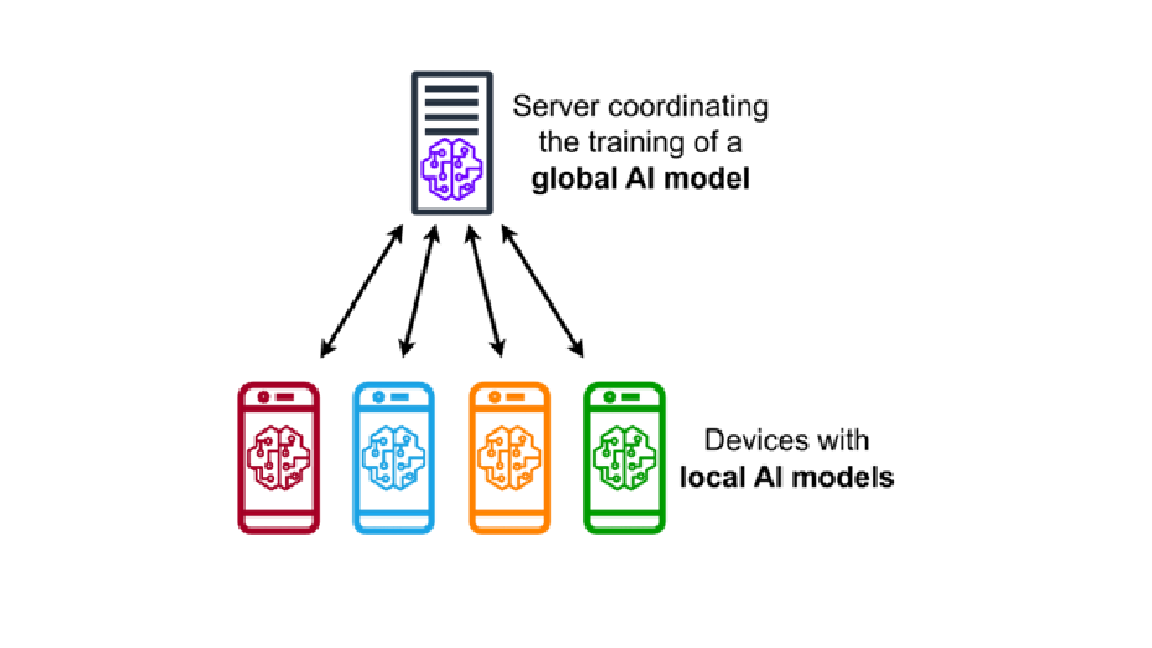

Federated Learning (FL) is a transformative paradigm that enables collaborative machine learning across multiple decentralized data sources without requiring raw data to be shared. It has emerged as a key approach to privacy-preserving machine learning, particularly in sensitive domains such as healthcare, finance, and IoT systems. FL frameworks employ techniques such as secure aggregation, differential privacy, and homomorphic encryption to protect user data while still allowing the shared model to converge. These frameworks also address key challenges, including communication efficiency, data heterogeneity, and robustness against malicious participants. This paper reviews state-of-the-art FL frameworks, examining their architectures, privacy-preserving techniques, and application scenarios. It also highlights recent advances in secure federated learning protocols, secure multi-party computation strategies, and scalability improvements. The discussion then turns to open challenges, such as ensuring fairness, optimizing resource consumption, and maintaining security guarantees in adversarial settings. By advancing privacy-preserving FL frameworks, researchers and practitioners can realize the potential of collaborative machine learning while upholding stringent data privacy standards.
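To make "secure aggregation" concrete, the sketch below illustrates the pairwise-masking idea that underlies practical secure aggregation protocols. Each pair of clients shares a seed and derives the same random mask from it. The lower-numbered client adds the mask and the higher-numbered client subtracts it, so the server sees only masked vectors while the masks cancel exactly in the sum. This is a simplified illustration under stated assumptions, not a production protocol. The function names, the fixed-point `SCALE`, and the pre-shared `seeds` dictionary are hypothetical. Real protocols agree on seeds through key exchange, use a cryptographically secure PRG instead of `random.Random`, and handle client dropouts, none of which is modeled here.

```python
import random
from itertools import combinations

MODULUS = 2**32  # ring arithmetic so that masks cancel exactly
SCALE = 1000     # hypothetical fixed-point scale for real-valued updates

def encode(update):
    """Quantize a real-valued update vector into integers mod MODULUS."""
    return [round(x * SCALE) % MODULUS for x in update]

def decode(vec):
    """Map ring elements back to signed reals."""
    return [((v + MODULUS // 2) % MODULUS - MODULUS // 2) / SCALE for v in vec]

def pairwise_masks(client_ids, dim, seeds):
    """For each pair (i, j) with i < j, derive one mask from their shared seed.
    Client i adds it and client j subtracts it, so the masks sum to zero.
    NOTE: random.Random is NOT cryptographically secure; illustration only."""
    masks = {c: [0] * dim for c in client_ids}
    for i, j in combinations(sorted(client_ids), 2):
        rng = random.Random(seeds[(i, j)])
        mask = [rng.randrange(MODULUS) for _ in range(dim)]
        masks[i] = [(a + m) % MODULUS for a, m in zip(masks[i], mask)]
        masks[j] = [(a - m) % MODULUS for a, m in zip(masks[j], mask)]
    return masks

def mask_update(update, mask):
    """Client side: hide the encoded update behind the client's combined mask."""
    return [(u + m) % MODULUS for u, m in zip(encode(update), mask)]

def aggregate(masked_updates):
    """Server side: sum the masked vectors. Only the total is revealed."""
    total = [0] * len(masked_updates[0])
    for vec in masked_updates:
        total = [(t + v) % MODULUS for t, v in zip(total, vec)]
    return decode(total)

if __name__ == "__main__":
    updates = {1: [0.5, -1.0], 2: [0.25, 2.0], 3: [-0.75, 0.5]}
    seeds = {pair: random.SystemRandom().randrange(2**63)
             for pair in combinations(sorted(updates), 2)}
    masks = pairwise_masks(list(updates), 2, seeds)
    masked = [mask_update(updates[c], masks[c]) for c in updates]
    print(aggregate(masked))  # → [0.0, 1.5]
```

The server recovers only the element-wise sum of the three updates. Each individual masked vector looks uniformly random to it. Fixed-point encoding in a finite ring is used because floating-point masks would not cancel exactly.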