Towards Explainable Artificial Intelligence: Interpretable Models and Techniques

Abstract

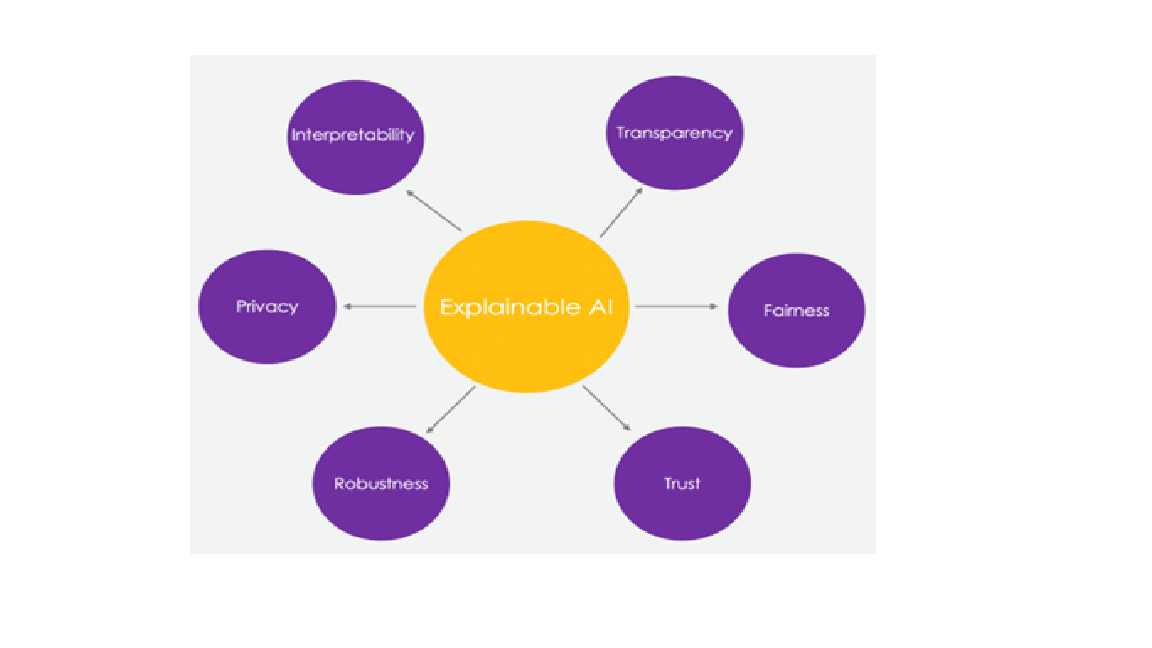

The rapid advancement of artificial intelligence (AI) has led to its integration into critical domains such as healthcare, finance, and autonomous systems, where understanding and trusting AI decisions is paramount. While deep learning models often achieve state-of-the-art performance, their complex, black-box nature limits their interpretability. This paper explores the growing field of explainable AI (XAI), focusing on methods and techniques for enhancing the interpretability of AI models. We examine various approaches, including model-specific techniques such as decision trees and rule-based systems, and model-agnostic methods such as feature importance, local explanations, and surrogate models. We also discuss the trade-offs between accuracy and interpretability and provide a comprehensive review of the current landscape and future challenges. By promoting transparency in AI, this research aims to improve user trust, ensure fairness, and facilitate the deployment of AI systems in safety-critical applications.