Generative AI Concepts for Data Privacy Protection, Understanding the Potential Risk Mitigation

Abstract

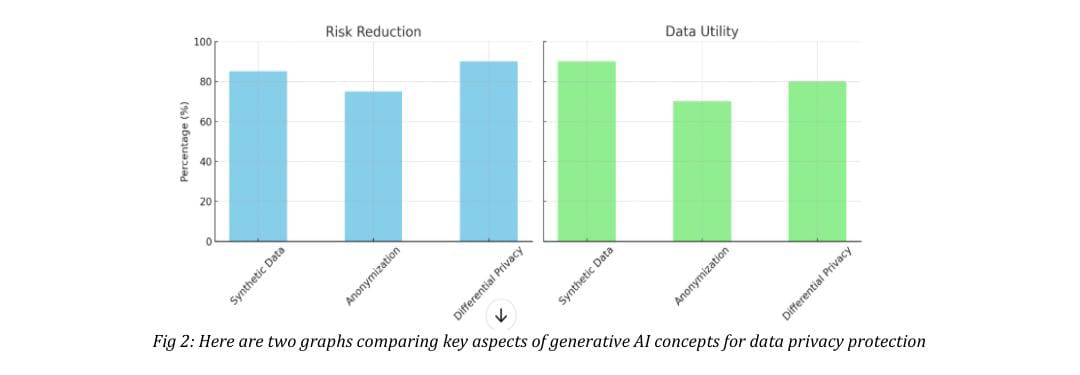

Data-driven innovation has made generative artificial intelligence (AI) a powerful tool with the potential to transform industries. Its capabilities also pose risks to data privacy, raising serious concerns about synthetic data fabrication, re-identification of anonymized data, and unauthorized data use. By examining the relationship between generative AI and data privacy, this paper provides a thorough account of these risks and of techniques for mitigating them. It explores the privacy implications of key generative AI concepts, including deep generative models such as Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs). It also addresses the dual-use nature of generative AI: the technology can create synthetic datasets that safeguard sensitive data, but it can also be used to reconstruct or misuse personal data. This work proposes a framework for privacy protection in generative AI systems, with differential privacy, federated learning, and ethical AI practices as the cornerstones of risk mitigation. By integrating these methods, organizations can leverage generative AI for innovation without compromising individual privacy. The study emphasizes that legal frameworks and technical safeguards are necessary to ensure the ethical use of generative AI in sensitive fields such as healthcare, finance, and social media.