Ethical Considerations in AI: Bias Mitigation and Fairness in Algorithmic Decision Making

Abstract

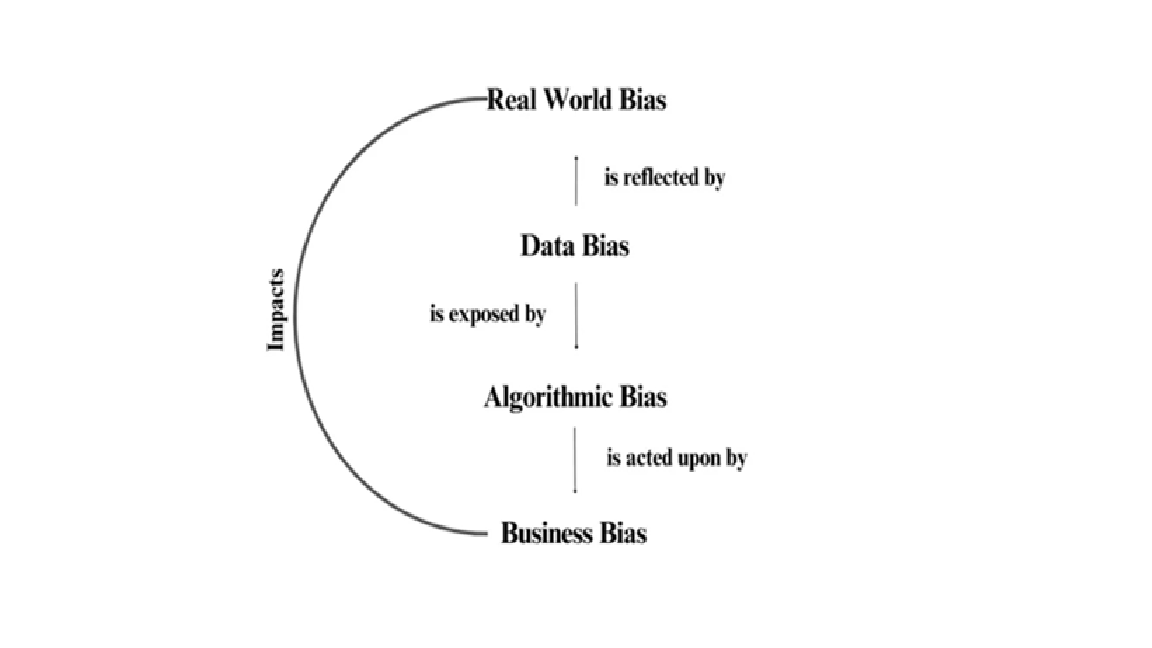

The rapid integration of artificial intelligence (AI) into critical decision-making domains such as healthcare, finance, law enforcement, and hiring has raised significant ethical concerns about bias and fairness. Algorithmic decision-making systems that are not carefully designed and monitored risk perpetuating and amplifying societal biases, leading to unfair and discriminatory outcomes. This paper explores the ethical considerations surrounding AI, focusing on bias mitigation and fairness in algorithmic systems. We examine the sources of bias in AI models, including biased training data, algorithmic design choices, and systemic inequities. We then review existing approaches to bias mitigation, such as fairness-aware machine learning techniques, adversarial debiasing, and regulatory frameworks that promote transparency and accountability. We also discuss the trade-offs among fairness, accuracy, and interpretability, and emphasize the need for interdisciplinary collaboration in developing ethical AI systems. By analyzing current challenges and emerging solutions, the paper offers a roadmap for responsible AI development that prioritizes fairness, reduces bias, and fosters trust in automated decision-making.