Citizen-Led AI Audit Platform for Transparency and Accountability in Automated Decision-Making

Abstract

Artificial Intelligence (AI) and automated decision-making systems are increasingly embedded in critical areas of governance such as housing allocation, welfare distribution, recruitment, healthcare, and immigration. While these systems promise efficiency and scalability, they often operate as opaque “black boxes,” producing decisions that lack explainability or recourse for affected citizens. This opacity undermines public trust and accountability in digital governance.

This review paper examines global efforts toward Responsible AI and highlights the urgent need for citizen-led auditing mechanisms that operationalize fairness, transparency, and accountability in practice. Drawing on recent literature on algorithmic transparency, fairness auditing, and privacy-preserving governance frameworks, the paper identifies three key gaps: the absence of citizen-sourced evidence pipelines, the absence of cross-domain bias mapping, and the lack of measurable audit effectiveness.

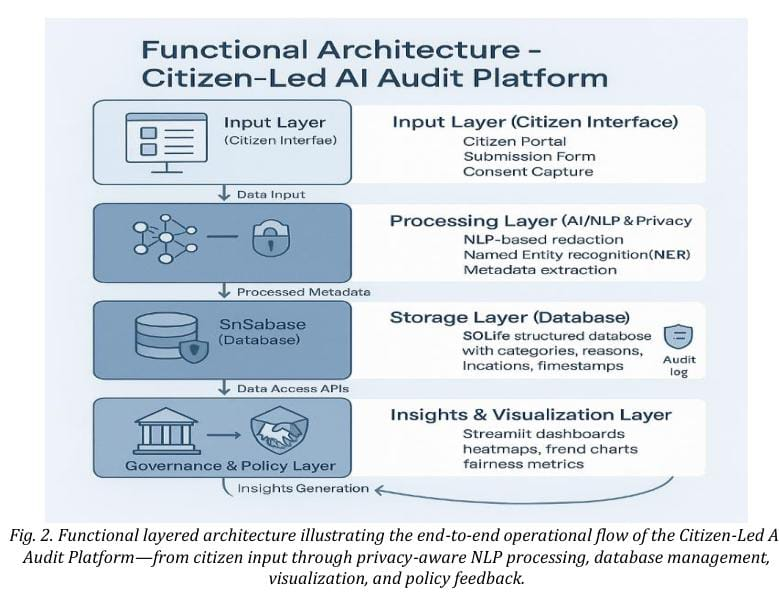

A conceptual framework and layered functional architecture are proposed to integrate citizen reporting, NLP-based anonymization, structured metadata storage, and visualization dashboards for systemic bias detection. The study bridges theoretical Responsible-AI principles with practical citizen-centric accountability models, offering a scalable foundation for participatory and ethical AI governance.
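
The layered flow described above (citizen report, anonymization, structured storage, aggregation for a dashboard) can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration and is not taken from the paper: the `AuditRecord` fields, the function names, and above all the two regular expressions, which stand in for the NLP-based anonymization layer that a real system would need.

```python
import re
from collections import Counter
from dataclasses import dataclass

# Hypothetical stand-in for the NLP-based anonymizer: two simple regexes.
# A real deployment would need a proper named-entity or PII detection model.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")


@dataclass(frozen=True)
class AuditRecord:
    """Structured metadata kept for one citizen report (illustrative fields)."""
    domain: str   # e.g. "housing", "welfare", "recruitment"
    concern: str  # e.g. "no explanation", "suspected bias"
    text: str     # report text after anonymization


def anonymize(text: str) -> str:
    """Mask e-mail addresses and phone-like digit runs in free text."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def ingest(domain: str, concern: str, raw_text: str) -> AuditRecord:
    """Normalize the labels and anonymize the text before storage."""
    return AuditRecord(
        domain=domain.strip().lower(),
        concern=concern.strip().lower(),
        text=anonymize(raw_text),
    )


def summarize(records: list[AuditRecord]) -> Counter:
    """Count reports per (domain, concern) pair, as a dashboard might plot."""
    return Counter((r.domain, r.concern) for r in records)


if __name__ == "__main__":
    records = [
        ingest("Housing", "No explanation", "Reach me at jane@example.org"),
        ingest("housing", "no explanation", "Call 555-123-4567 for details"),
        ingest("Welfare", "Suspected bias", "My claim was rejected twice"),
    ]
    for key, count in summarize(records).items():
        print(key, count)
```

Grouping on normalized `(domain, concern)` pairs is one simple way to surface patterns that recur across domains; the proposed architecture may well use richer metadata and different aggregation.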