Explainable AI for Autonomous Vehicles: Interpretable Decision Making

Abstract

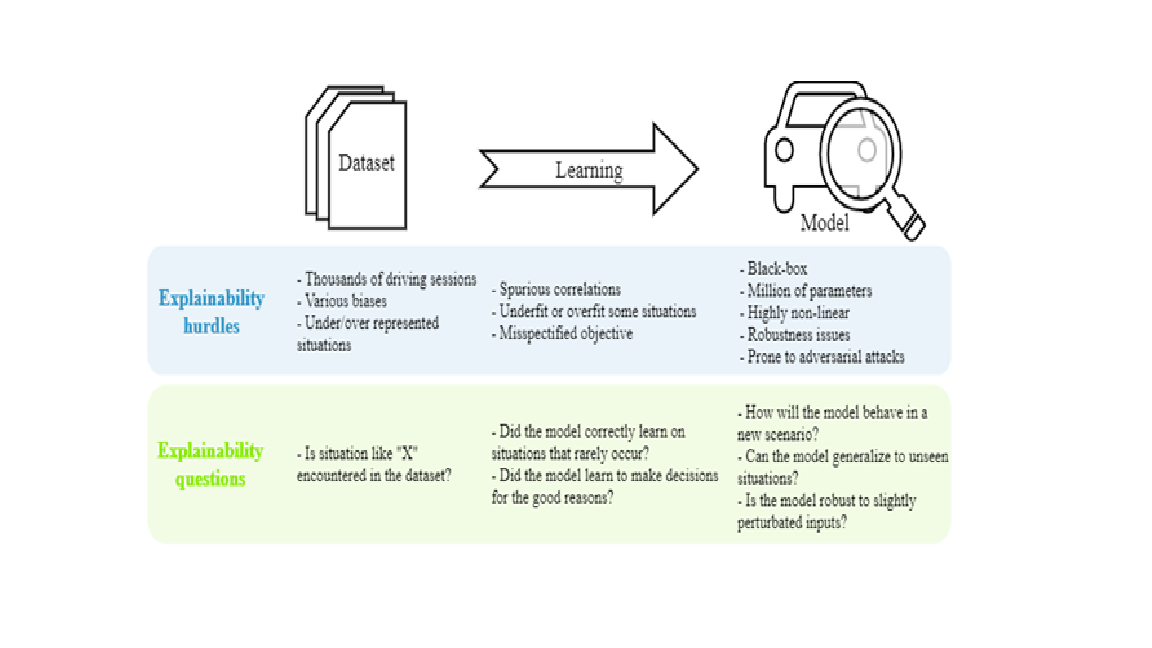

Explainable AI (XAI) has emerged as a critical area of research in autonomous-vehicle development, aimed at making AI-driven driving decisions interpretable and trustworthy. In this paper, we examine why interpretable decision-making matters for autonomous vehicles and survey the techniques and methodologies that help make AI-driven systems explainable. We discuss the challenges of implementing XAI in autonomous vehicles, including the need for transparent decision-making processes and the trade-off between model complexity and interpretability. We also consider the potential benefits of explainable AI, including improved safety, regulatory compliance, and user acceptance. Drawing on existing literature and case studies, we summarize state-of-the-art XAI techniques applied to autonomous vehicles and highlight future research directions in this domain. Through this review, we aim to underscore the role of explainable AI in ensuring the safe and reliable operation of autonomous vehicles in real-world scenarios.